NG-CAFE

Next Generation Cafe

NG-CAFE is a next genaration cafe, where a robotic agent plays the role of a waiter, managing orders and serving at tables.

The project considered simplified problems related to service robotics in an applicative context of support to the work

and it was aimed at developing an intelligent agent combining both deliberative and reactive aspects,

with the objective of driving the behaviour of a physical robotic agent able to (i) acquire orders from clients sit at tables,

(ii) bring them the ordered food and drinks, (iii) clean the tables after the meals, (iv) throw trash away.

-

NG-CAFE has been developed by D. Dell'Anna, M. Madeddu and E. Mensa

as lab project for the Artificial Intelligence and Laboratory course, with the supervision of Prof. Pietro Torasso

@ Department of Computer Science, University of Turin, 2014.

Assumptions

The agent is equipped with sensors and interpretative processes which are always correct (not ambiguous nor erroneous), however they have limited coverage. The environment is only partly observable and it is dynamic. Agent's actions are always executed successfully.

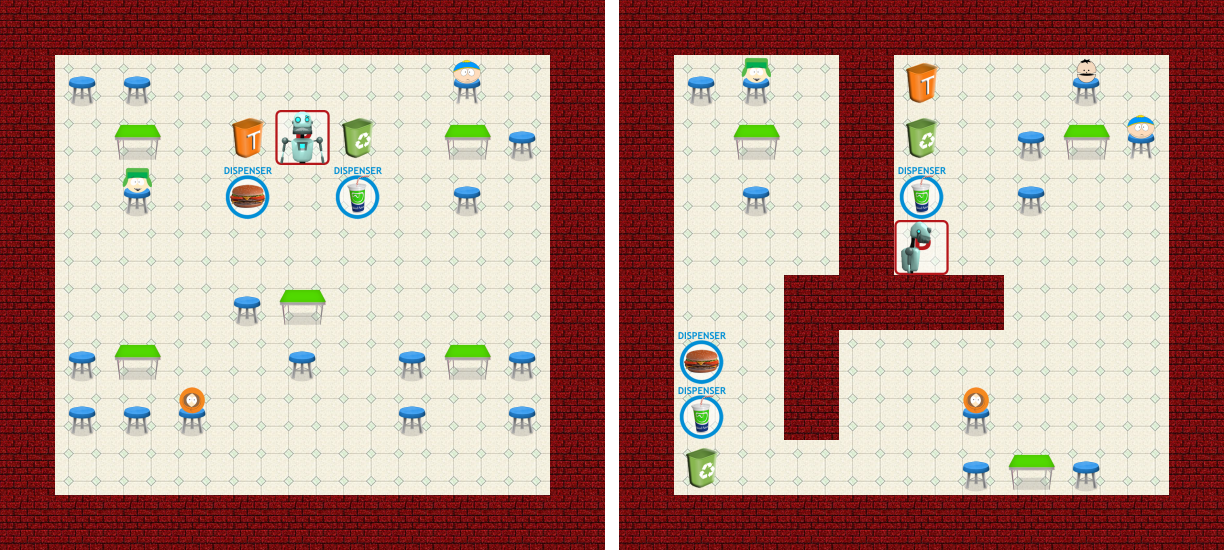

The environment

The cafe is equipped with: dispensers where the agent can pick food and drinks, trash baskets, a parking slot where the agent can reload the batteries. Clients can move in the cafe or sit on seats around a table and they can dynamically make orders.

Agent's capabilities

Prior knowledge about the environment: walls, tables, seats, dispensers and parking locations. The agent can move in the environment and perceive it within a specified radius around it. It can pick up and leave objects from/at dispensers, tables, trash baskets and receive new orders and messages from the clients.

CLIPS

The core of the agent is built in CLIPS, a rule-based programming language useful for creating expert systems.

The simulation

The agent is equipped with perceptual, deliberative and planning capabilities. It makes use of an embedded implementation of A* for path-finding, using Manhattan heuristic and of several strategies and heuristics for the deliberation tasks. A simplified environment module simulates the occurrence of events as well as time passing and penalties calculation.

Interface

CLIPS has been integrated in Java in order to ease the simulations. The user interface, provides live information about the current execution but also statistical information about previous simulations. All the simulation parameters can be directly managed from the interface.

Deliberation strategies

Seven different deliberation strategies have been implemented and compared:

- LIFO: the default CLIPS rule firing mechanism is exploited and the agent chooses the goal related to the most recent request.

- FIFO: the agent chooses the goal according to the arrival order.

- Random: the agent chooses the goal randomly among those pending.

- Distance: the agent chooses the goal that requires the minimum distance to travel for performing all the subtasks (calculated using Manhattan heuristic).

- Pure Penalty: the agent chooses the goal resulting in the minimum penalty (estimation made without considering the distance)

- Penalty on Distance (PoD): the agent chooses the goal resulting in the minimum ratio penalty/distance estimated.

- Full Utility: the agent chooses the goal resulting in the minimum ratio penalty/distance estimated, weighing penalties for each subtask according to the relative distance.

Experimentation

Statistics Panel

A fast execution modality allows to rapidly execute a simulation and generate statistics about the execution. Information about the goals execution timeline, the most crossed cells, the history of events and penalties stats are immediatly available.

-

Comparison Tool

A comparison tool allows to easily compare two or more simulations with different strategies and problems in terms of: general problem information, penalty trend in time and tables average waiting time.

-

7

deliberation strategies

7

environments:

3 maps (10x10 and 17x17) with 3 different leves of difficulty

30.000+

executions

For a complete experimental evaluation see related works.